Visual Reinforcement Learning with Self-Supervised 3D Representations

Abstract

A prominent approach to visual Reinforcement Learning (RL) is to learn an internal state representation using self-supervised methods, which has the potential benefit of improved sample-efficiency and generalization through additional learning signal and inductive biases.

However, while the real world is inherently 3D, prior efforts have largely been focused on leveraging 2D computer vision techniques as auxiliary self-supervision.

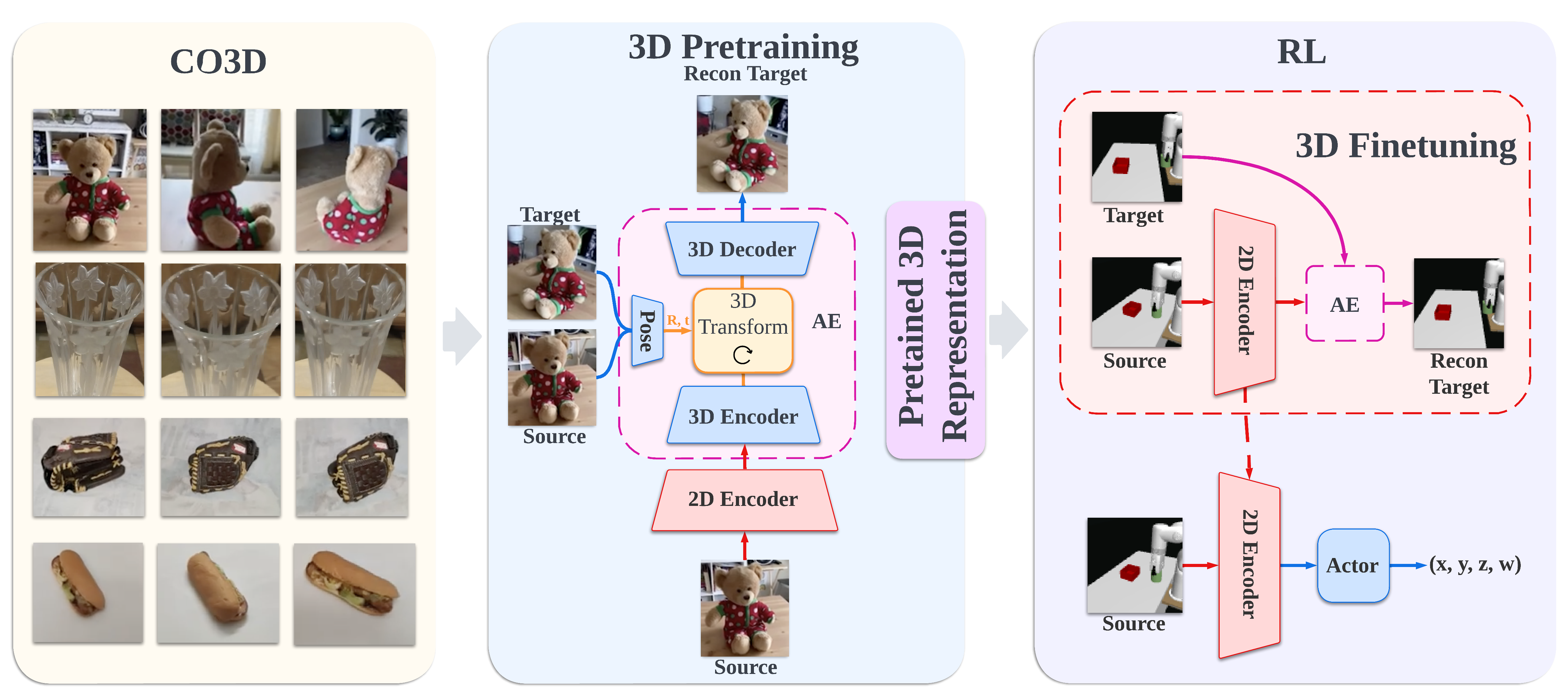

In this work, we present a unified framework for self-supervised learning of 3D representations for motor control. Our proposed framework consists of two phases: a pretraining phase, in which a deep voxel-based 3D autoencoder is pretrained on a large object-centric dataset, and a finetuning phase, in which the representation is finetuned jointly with RL on in-domain data.

We empirically show that our method enjoys improved sample efficiency in simulated manipulation tasks compared to 2D representation learning methods.

Additionally, our learned policies transfer zero-shot to a real robot setup with only approximate geometric correspondence and uncalibrated cameras, and successfully solve motor control tasks that involve grasping and lifting from a single RGB camera.

Video

Method Overview
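
The two phases described in the abstract can be sketched as a training schedule. This is a minimal illustration rather than the authors' implementation: the names `TwoPhaseSchedule`, `phase_at`, and `objective`, the step counts, and the `aux_weight` factor that balances the 3D loss against the RL loss are all assumptions introduced here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TwoPhaseSchedule:
    """Hypothetical schedule; none of these fields come from the paper."""
    pretrain_steps: int      # 3D-only updates on the object-centric dataset
    finetune_steps: int      # joint RL + 3D updates on in-domain data
    aux_weight: float = 1.0  # assumed weight on the 3D loss during finetuning


def phase_at(schedule, step):
    """Return which phase a given 0-based update step belongs to."""
    total = schedule.pretrain_steps + schedule.finetune_steps
    if not 0 <= step < total:
        raise ValueError(f"step {step} is outside the schedule (0..{total - 1})")
    return "pretrain" if step < schedule.pretrain_steps else "finetune"


def objective(schedule, step, loss_3d, loss_rl=0.0):
    """Combine the loss terms that are active at this step.

    Pretraining optimizes only the self-supervised 3D loss.
    Finetuning optimizes the RL loss plus the weighted 3D loss,
    so the representation keeps receiving the 3D learning signal.
    """
    if phase_at(schedule, step) == "pretrain":
        return loss_3d
    return loss_rl + schedule.aux_weight * loss_3d
```

With `TwoPhaseSchedule(pretrain_steps=3, finetune_steps=2, aux_weight=0.5)`, steps 0 to 2 use only the 3D loss, and steps 3 and 4 add the RL loss to half of the 3D loss.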

Visualization of Static View and Dynamic View

Tasks shown, each in a static view and a dynamic view: Lift, Push, Peg in Box, Reach.

Sim-to-Real Results

Sim-to-Perturbed-Real Results

To evaluate the robustness of our method, we perturb the real-world environment by changing the camera position, camera orientation, lighting, and background.

Novel Real View Synthesis

Although our method is trained only in simulation, we feed it real-world images and synthesize novel views from them. The main scene structure is preserved, but the synthesized images are lower in quality than the real inputs, in part because the 3D representation is trained jointly with RL.
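
One common way for a voxel-based autoencoder to render a new view is to rotate its 3D representation and then decode it. The sketch below illustrates only the geometric part, for a 30° change of view. It is an assumption-laden illustration: the page does not state the rotation axis or sign convention, so rotation about a vertical z axis is assumed, the function names are hypothetical, and the learned encoder and decoder are not shown.

```python
import math


def rotate_about_vertical(point, degrees):
    """Rotate an (x, y, z) point about the z axis, assumed here to be vertical."""
    x, y, z = point
    theta = math.radians(degrees)
    c, s = math.cos(theta), math.sin(theta)
    return (c * x - s * y, s * x + c * y, z)


def rotated_voxel_centers(size, degrees):
    """Map each voxel index of a size^3 grid to its rotated center.

    The grid is centered at the origin so the rotation pivots
    around the middle of the volume rather than a corner.
    """
    half = (size - 1) / 2.0
    centers = {}
    for i in range(size):
        for j in range(size):
            for k in range(size):
                centered = (i - half, j - half, k - half)
                centers[(i, j, k)] = rotate_about_vertical(centered, degrees)
    return centers
```

For example, `rotate_about_vertical((1.0, 0.0, 0.0), 30.0)` moves the point to roughly `(0.866, 0.5, 0.0)`. In a full pipeline, the rotated coordinates would be used to resample the feature volume before decoding it into an image.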

Four image pairs are shown, each consisting of a Real Input and its Synthesis (30°).

Citation

@article{Ze2022rl3d,
  title={Visual Reinforcement Learning with Self-Supervised 3D Representations},
  author={Yanjie Ze and Nicklas Hansen and Yinbo Chen and Mohit Jain and Xiaolong Wang},
  journal={IEEE Robotics and Automation Letters (RA-L)},
  year={2023},
}